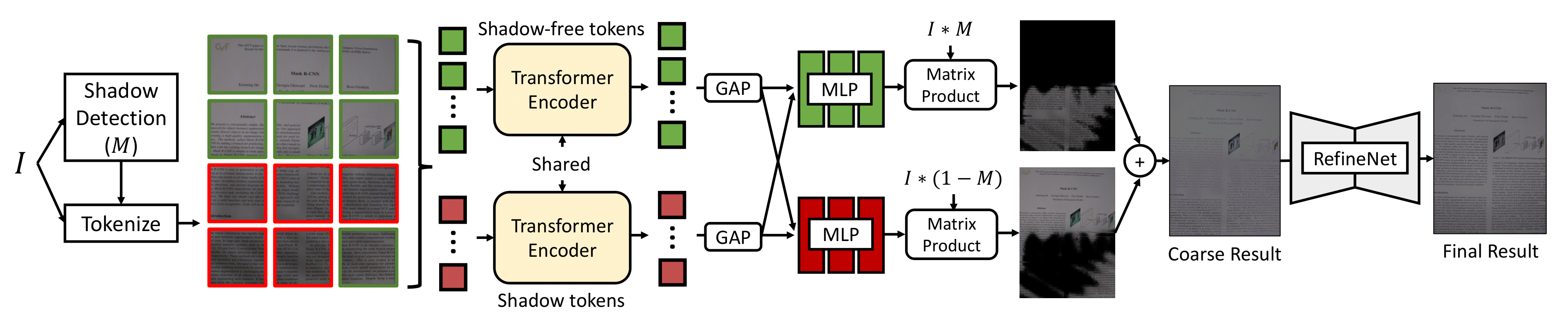

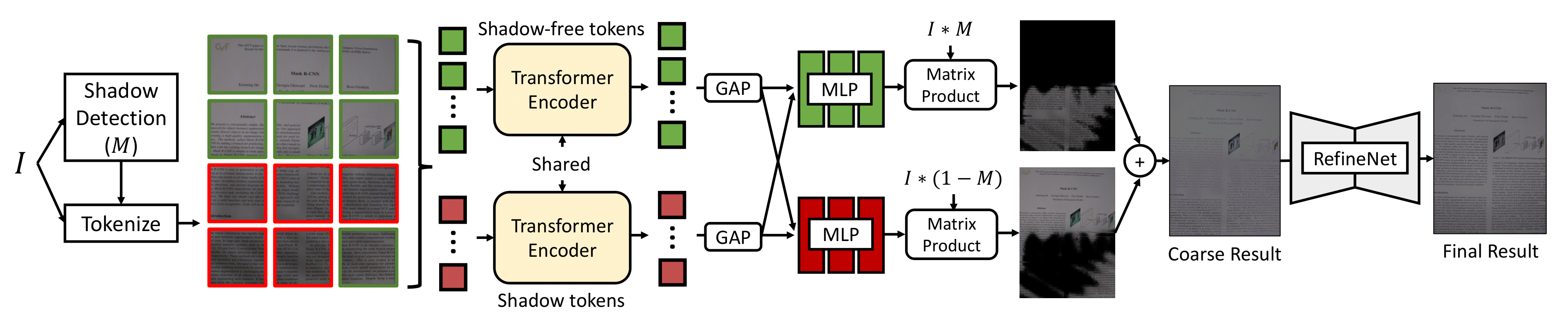

Method Overview

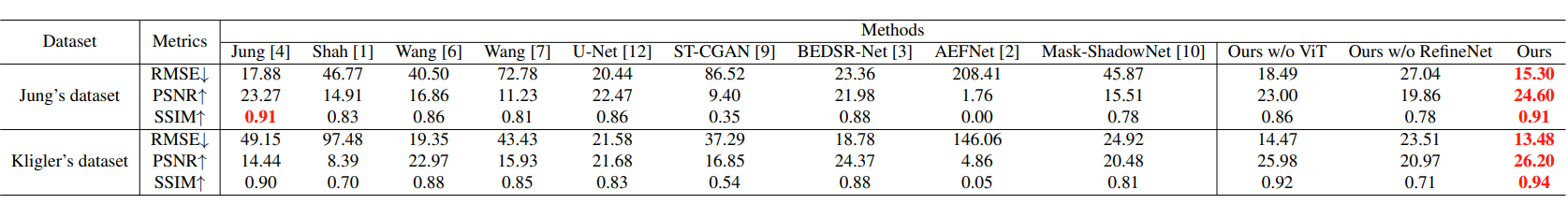

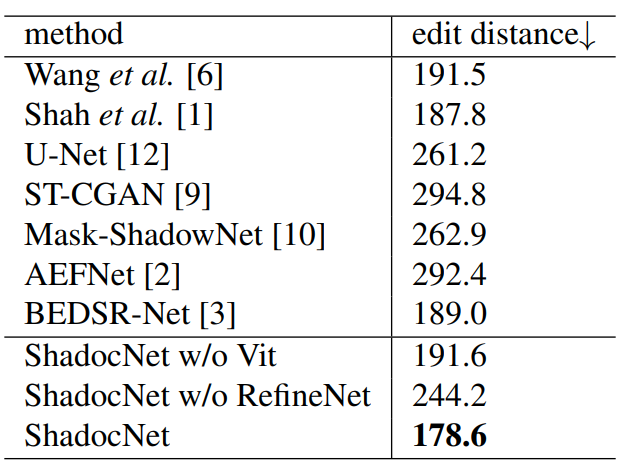

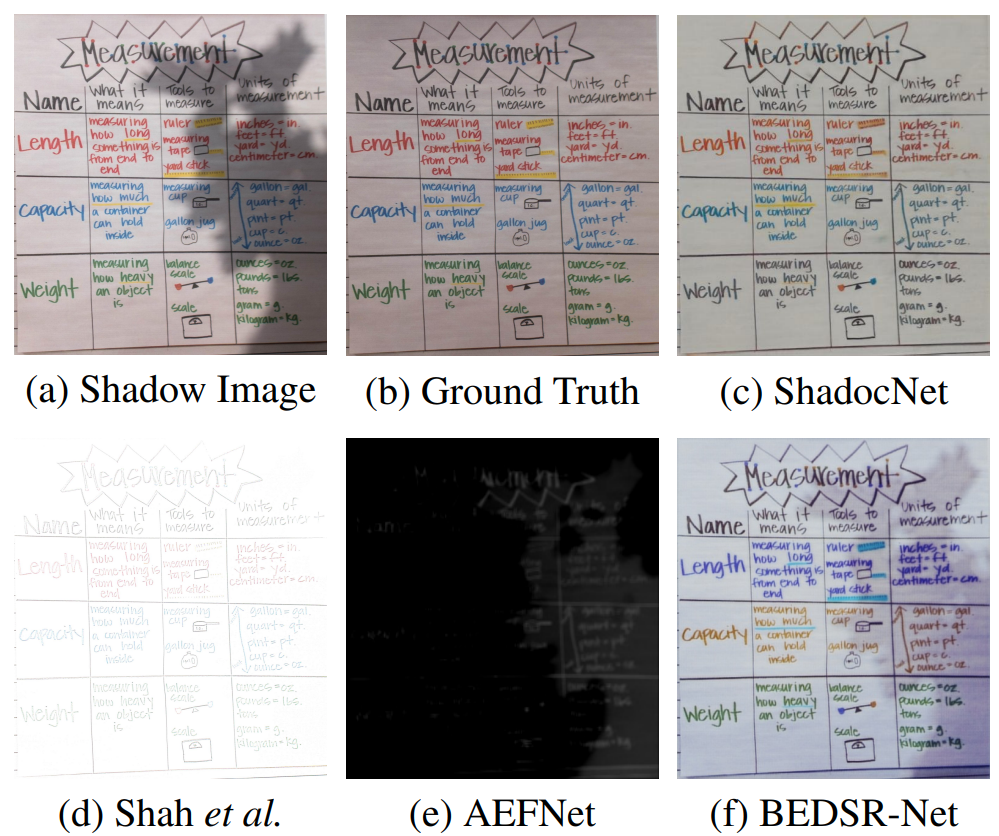

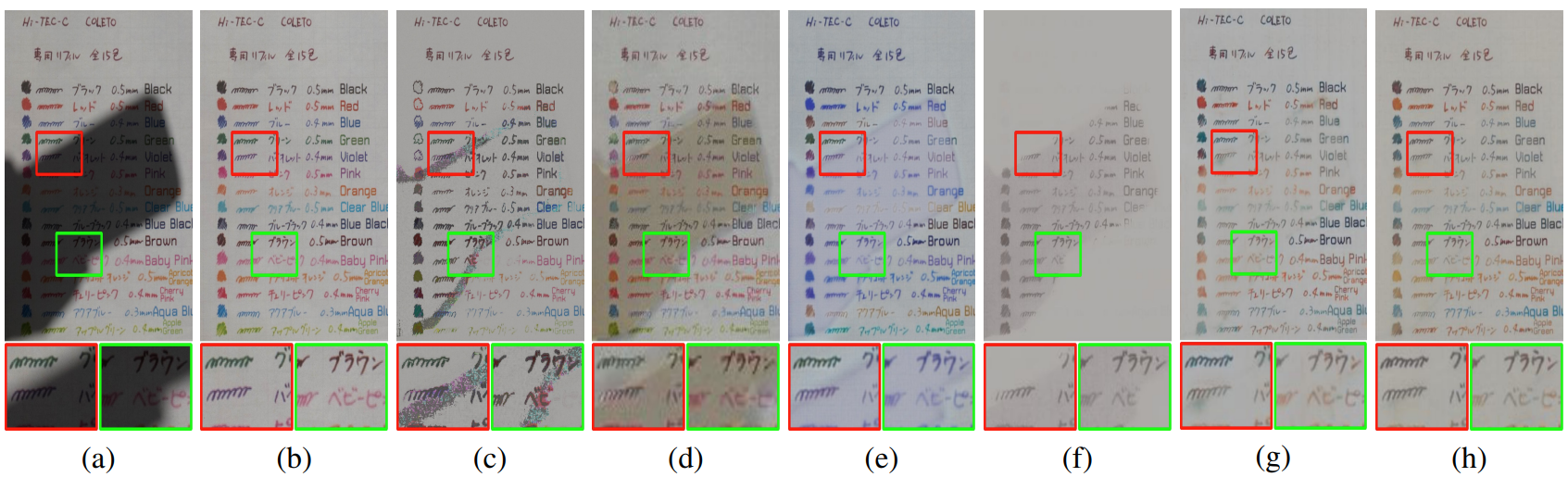

Shadow removal improves the visual quality and legibility of digital copies of documents. However, document shadow removal remains an open problem. Traditional techniques rely on heuristics that vary from case to case, and given the limited quality and quantity of current public datasets, most neural network models are ill-equipped for this task. In this paper, we propose a Transformer-based model for document shadow removal that encodes and decodes shadow context in both shadow and shadow-free regions. Shadow detection and pixel-level enhancement are also included in the overall coarse-to-fine process. In comprehensive benchmark evaluations, the proposed model is competitive with state-of-the-art methods.

@inproceedings{chen2023shadocnet,
  title={Shadocnet: Learning Spatial-Aware Tokens in Transformer for Document Shadow Removal},
  author={Chen, Xuhang and Cun, Xiaodong and Pun, Chi-Man and Wang, Shuqiang},
  booktitle={ICASSP 2023-2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP)},
  pages={1--5},
  year={2023},
  organization={IEEE}
}

This work was supported in part by the University of Macau under Grant MYRG2022-00190-FST, in part by the Science and Technology Development Fund, Macau SAR, under Grant 0034/2019/AMJ, Grant 0087/2020/A2 and Grant 0049/2021/A, in part by the National Natural Science Foundation of China under Grant 62172403, and in part by the Distinguished Young Scholars Fund of Guangdong under Grant 2021B1515020019.